CPR for a Docker container

Background

Picture this: You're experimenting with a Docker container, enable a feature, and suddenly your container is stuck in an endless restart loop. In a panic, you run docker compose down and watch everything disappear.

What You Should Do Instead

Don't lose the container, no matter how broken it is. Create a new image from it using docker commit: docker commit <container-id> broken-image (you can get the container ID from docker ps -a).

From here there are a couple of things you could do. I was using a Dockerfile to build and set things up, so I modified it to use the broken image as the base and install the missing dependencies:

FROM broken-image:latest
RUN pip install <package-name>

And with this in place I can restart the docker compose stack.

Complicated changes

If the damage to your container is more severe, reviving it takes a few more steps:

- Create an image from your broken container: docker commit <container-id> broken-image

- Create a new container from this broken image with a different entrypoint command (the default command would restart the broken stack): docker run -it --entrypoint=/bin/bash broken-image:latest

- Adjust or remove your changes, install missing dependencies, run migrations, what have you.

- Create a new image from this container that has all the fixes: docker commit <container-id-with-bash-running> fixed-image

- Start a new container using this fixed-image and the default command that brings up your original stack. In my case I removed the build step from my docker-compose.yml file and pointed it to the new image:

      app:
        image: fixed-image:latest

- Be careful with running faulty containers. I was really confused juggling docker compose commands and direct docker commands. I noticed that the docker compose (re)start command would also (re)start all broken/existing containers, and I had to remove them manually to get the complete stack running again.

With this approach I was no longer building a new Docker image. Instead, I used docker compose to start a container from fixed-image with the default command.
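The recovery steps above can be scripted so they are not typed under pressure. Below is a minimal Python sketch that only wraps the docker CLI commands from this post. The container name rescue-shell and the helper names are hypothetical additions: naming the shell container lets you commit it by name instead of looking up its ID. By default it only prints the commands (dry_run=True); it runs them through subprocess only when asked.

```python
import shlex
import subprocess
from typing import List


def rescue_plan(container_id: str,
                broken: str = "broken-image",
                fixed: str = "fixed-image",
                shell_name: str = "rescue-shell") -> List[List[str]]:
    """Return the docker commands for the rescue, in order (hypothetical helper)."""
    return [
        # Snapshot the broken container's filesystem as an image.
        ["docker", "commit", container_id, broken],
        # Start a shell instead of the default entrypoint so the broken
        # startup command does not run. Fix things, then exit the shell.
        ["docker", "run", "-it", "--name", shell_name,
         "--entrypoint", "/bin/bash", f"{broken}:latest"],
        # Commit the (now stopped) shell container with all the fixes.
        ["docker", "commit", shell_name, fixed],
    ]


def run_plan(plan: List[List[str]], dry_run: bool = True) -> None:
    for argv in plan:
        print("$", shlex.join(argv))
        if not dry_run:
            # check=True stops the plan at the first failing command.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_plan(rescue_plan("<container-id>"))
```

After the last commit, the compose file can point at fixed-image:latest as described in the list above.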

Summary:

- Always test changes in development first

- Use docker commit on your container before making risky changes

The right recipe

A colleague reached out to me for a pairing session on his project. I explained upfront that my hourly rate far exceeds most LLM subscription costs. He understood — while LLMs have their place, he needed help clarifying requirements and making real progress.

His existing setup: several machines pushing data to the cloud, and a Looker Studio dashboard connected to a placeholder Google spreadsheet. To start, he wanted to make this dashboard live.

His requirements:

- A live dashboard

- Python based: his comfort zone

- Daily data pulls: sufficient for non-temporal data

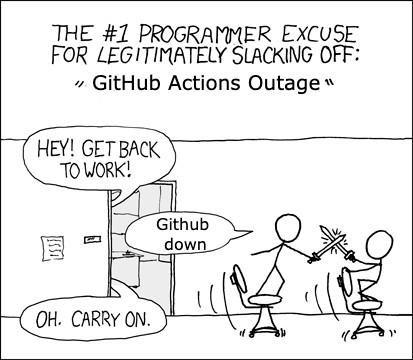

The solution was obvious, and I had used it before: a GitHub Action that runs daily, pulls the cloud data and updates his spreadsheet, instantly making his dashboard live. I understand that I am contributing to the GitHub Actions phenomenon:

The trickiest parts? Wrestling with Google's Sheets API authentication and configuring GitHub secrets properly. But once those pieces clicked, everything flowed. Four focused one-hour pairing sessions, that's it. He got a working solution, and he understood all the moving parts well enough to keep experimenting on his own.
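The two fiddly parts of the daily job, credentials from a secret and shaping data for the sheet, can be sketched in Python. This is not the code from our sessions: the environment variable name GOOGLE_SERVICE_ACCOUNT_JSON, the record shape and both helpers are assumptions. In the workflow, the variable would be filled from a repository secret (for example `env: GOOGLE_SERVICE_ACCOUNT_JSON: ${{ secrets.GOOGLE_SERVICE_ACCOUNT_JSON }}`), and the workflow itself would run on an `on: schedule` cron trigger.

```python
import json
import os
from typing import Any, Dict, List, Sequence


def load_service_account(env_var: str = "GOOGLE_SERVICE_ACCOUNT_JSON") -> Dict[str, Any]:
    """Read the service-account key that a GitHub secret exposes as an env var."""
    raw = os.environ.get(env_var)
    if not raw:
        raise RuntimeError(f"{env_var} is not set; add it as a repository secret")
    return json.loads(raw)


def to_sheet_rows(records: Sequence[Dict[str, Any]],
                  columns: Sequence[str]) -> List[List[Any]]:
    """Flatten cloud records into a header row plus one row per record."""
    rows: List[List[Any]] = [list(columns)]
    for record in records:
        # Missing fields become empty cells so every row has the same width.
        rows.append(["" if record.get(col) is None else record.get(col)
                     for col in columns])
    return rows
```

The rows would then go to a Sheets client such as gspread, authenticated with the loaded key; that upload step is left out because its exact calls depend on the client library and version.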

Four hours, serverless, an elegant solution. This is what going back to first principles looks like.

Setting Up Zephyr for ESP32: A Blinky LED Journey

I recently started working with a client on an ESP32-based project. Having prior experience with Zephyr on Nordic boards, I decided to leverage Zephyr's Espressif support for this project. While the documentation mentions the classic "blinky" example, I noticed it lacked the necessary devicetree overlay file for my specific board.

Finding the Right Example

Fortunately, I discovered that the "PWM Blinky" example included sample overlay files I could reference. After compiling the example, I encountered this error when attempting to flash the device:

([Errno 5] could not open port /dev/ttyUSB0: [Errno 5] Input/output error: '/dev/ttyUSB0')

Running stat /dev/ttyUSB0 revealed that the device belonged to the dialout group. I fixed the permission issue by adding my user to this group:

sudo usermod -a -G dialout $USER

After rebooting to apply the new permissions, west flash worked perfectly.
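The stat check above can be turned into a quick diagnostic. A minimal sketch using only the standard library (Linux/Unix only, since it relies on the grp module); the function name and report fields are made up for illustration. It also shows why a new login session (here, the reboot) was needed: a process's group list is fixed when the session starts, so a fresh usermod -aG is not visible to already-running shells.

```python
import grp
import os
import stat


def serial_port_report(path: str = "/dev/ttyUSB0") -> dict:
    """Summarize who may open a device node and whether this process can."""
    st = os.stat(path)
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:  # gid without an entry in the group database
        group = str(st.st_gid)
    return {
        "path": path,
        "mode": stat.filemode(st.st_mode),  # e.g. "crw-rw----" for a tty device
        "group": group,
        # Group membership of *this* process, not of the user account.
        "in_group": st.st_gid in os.getgroups() or st.st_gid == os.getegid(),
        "can_read_write": os.access(path, os.R_OK | os.W_OK),
    }


if __name__ == "__main__":
    try:
        print(serial_port_report())
    except FileNotFoundError:
        print("No /dev/ttyUSB0; is the board plugged in?")
```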

Creating the missing overlay file

The basic Blinky documentation explains the need for an overlay file that points to the correct GPIO pin. Based on the PWM Blinky example, I discovered that my board (esp32_devkitc_wroom) has an onboard blue LED connected to pwm_led_gpio0_2. I created the following overlay file:

/ {
    aliases {
        led0 = &myled0;
    };

    leds {
        compatible = "gpio-leds";
        myled0: led_0 {
            gpios = <&gpio0 2 GPIO_ACTIVE_LOW>;
        };
    };
};

Success

With the overlay in place (samples/basic/blinky/boards/esp32_devkitc_wroom_procpu.overlay), I compiled the project and it worked:

west build -p always -b esp32_devkitc_wroom/esp32/procpu samples/basic/blinky

After flashing the board, there it was: a blinking blue LED. A small victory, and still only the first step, but one I keep revisiting.

Next up: implementing the project's actual functionality, including WiFi provisioning, ePaper display integration, and LED ring controls.

Resolution / सलटारा

Continuing from the last post. The fact that the Python interpreter didn't catch the regex pattern in tests but threw a compile error on the staging environment was very unsettling. Personally, I knew that I was missing something on my part, and I was very reluctant to blame the language itself. Turns out, I was correct 🙈.

I was looking for early feedback and comments on the post, and Punch was totally miffed by this inconsistency, especially by the conclusion I was coming to:

Umm, I'm not sure I'm happy with the suggestion that I should check every regex I write, with an external website tool to make sure the regex itself is a valid one. "Python interpreter ka kaam main karoon abhi?" (Am I supposed to do the Python interpreter's job now?) :frown:

He quickly compared the behavior of JS (the compiler complained) and Python (did nothing). Now that I had his full attention, there was no putting this off to some later stage. We started digging. He confirmed with a simple command that the regex failed with Python 2 but not with Python 3.11:

docker run -it python:3.11 python -c 'import re; p = r"\s*+"; re.compile(p); print("yo")'

I followed up on this and found the mistake on my part. My local Python setup was 3.11, which I was using to run my tests, while the staging environment was using 3.10. When I ran my tests within a containerized setup, similar to what we used on staging, the Python interpreter rightly caught the faulty regex:

re.error: multiple repeat at position 11

Something changed between the Python 3.10 and 3.11 releases. I looked at the release notes and noticed a new feature: atomic grouping and possessive quantifiers. I am not sure how to use it or what it does [1]. But with this feature, the pattern r"\s*+" is valid in Python 3.11, which is what I was using to run tests locally. On staging we had Python 3.10, and there the interpreter threw an error.
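For the curious, here is a small sketch of what the 3.11 feature does. A possessive quantifier (`*+`, `++`, `?+`) matches as much as it can and then refuses to give anything back during backtracking; an atomic group `(?>...)` does the same for a whole subpattern. On older interpreters the same pattern is a "multiple repeat" error, which is exactly the 3.10/3.11 split above. The helper name compiles is just for illustration.

```python
import re
import sys


def compiles(pattern: str) -> bool:
    """True if this interpreter's re module accepts the pattern."""
    try:
        re.compile(pattern)
    except re.error:
        return False
    return True


print(sys.version_info[:2], compiles(r"\s*+"))  # False before 3.11, True on 3.11+

if sys.version_info >= (3, 11):
    text = "   "
    # Greedy: \s* can give one space back so the final \s still matches.
    assert re.fullmatch(r"\s*\s", text) is not None
    # Possessive: \s*+ keeps every space it took and never backtracks,
    # so the final \s has nothing left to match.
    assert re.fullmatch(r"\s*+\s", text) is None
    # An atomic group behaves the same way.
    assert re.fullmatch(r"(?>\s*)\s", text) is None
```

For plain optional whitespace, r"\s*" is what was wanted, and it behaves the same on every version.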

Lesson learned:

I was testing by running things locally. Don't do that. Set up a stack, get it as close to staging and production as possible, and always, always run tests within it.
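A cheap guard that would have flagged this mismatch early is to fail the test run when the interpreter's major.minor version differs from staging's. This is a sketch, not a replacement for running tests inside the staging-like stack; STAGING_PYTHON and the function name are assumptions, and calling it from something like a pytest conftest.py is one option.

```python
import sys
from typing import Optional, Tuple

# Hypothetical: keep this in sync with the Python version of the staging image.
STAGING_PYTHON: Tuple[int, int] = (3, 10)


def check_python_matches_staging(expected: Tuple[int, int] = STAGING_PYTHON,
                                 actual: Optional[Tuple[int, int]] = None) -> None:
    """Raise if the tests run on a different major.minor than staging."""
    if actual is None:
        actual = (sys.version_info.major, sys.version_info.minor)
    if tuple(actual) != tuple(expected):
        raise RuntimeError(
            f"tests run on Python {actual[0]}.{actual[1]}, "
            f"staging uses {expected[0]}.{expected[1]}"
        )
```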

You pull an end of a mingled yarn,

to sort it out,

looking for a resolution but there is none,

it is just layers and layers.

Assumptions / धारणा

In the team I started working with recently, I am advocating for code reviews, best practices, tests and CI/CD. I feel that in the Python ecosystem it is hard to push reliable code without these practices.

I was assigned an issue to extract features from text, which included extracting money figures. I started searching for existing libraries (humanize, spacy, numerize, advertools, price-parser), but each of them hit some edge case in my requirements. I drafted an OpenAI prompt and got a decent regex pattern that covered most of my requirements. I made a few improvements to the pattern and wrote unit and integration tests to confirm that the logic covered everything I wanted. So far so good. I got the PR approved, merged and deployed, only to find that the code didn't work: it was breaking on the staging environment.

As the prevailing wisdom around regular expressions goes, based on a 1997 chestnut:

Some people, when confronted with a problem, think "I know, I'll use regular expressions." Now they have two problems.

I have avoided regular expressions, and here I am.

I was getting the following stack trace:

File "/lib/number_parser.py", line 19, in extract_numbers

pattern = re.compile("|".join(monetary_patterns))

File "/usr/local/lib/python3.10/re.py", line 251, in compile

return _compile(pattern, flags)

File "/usr/local/lib/python3.10/re.py", line 303, in _compile

p = sre_compile.compile(pattern, flags)

File "/usr/local/lib/python3.10/sre_compile.py", line 788, in compile

p = sre_parse.parse(p, flags)

File "/usr/local/lib/python3.10/sre_parse.py", line 955, in parse

p = _parse_sub(source, state, flags & SRE_FLAG_VERBOSE, 0)

File "/usr/local/lib/python3.10/sre_parse.py", line 444, in _parse_sub

itemsappend(_parse(source, state, verbose, nested + 1,

File "/usr/local/lib/python3.10/sre_parse.py", line 672, in _parse

raise source.error("multiple repeat",

re.error: multiple repeat at position 11

Very confusing. There seemed to be an issue with my regex pattern, but the logic worked; I had tested it. The pattern would fail for a certain type of input and work for others. What gives? I shared the regex pattern with a colleague and he promptly identified the issue: there was a redundant + in my pattern. I wanted to match optional whitespace and had used the wrong pattern, r'\s*+'.
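One way to make this kind of failure point at the culprit is to compile each alternative on its own before joining them, mirroring the re.compile("|".join(monetary_patterns)) line from the stack trace. The patterns and helper names below are hypothetical stand-ins, not the ones from the project. Compiling at import time also means a bad pattern breaks any test that imports the module on that interpreter, instead of surfacing later.

```python
import re
from typing import List, Sequence, Tuple

# Hypothetical money patterns, for illustration only.
MONETARY_PATTERNS = [
    r"\$\s*\d[\d,]*(?:\.\d+)?",               # $1,200 or $ 3.50
    r"(?:Rs\.?|INR)\s*\d[\d,]*(?:\.\d+)?",    # Rs. 5000, INR 75
    r"\d[\d,]*(?:\.\d+)?\s*(?:USD|dollars)",  # 12 dollars, 40 USD
]


def find_invalid(patterns: Sequence[str]) -> List[Tuple[int, str, str]]:
    """Compile each pattern separately and report the ones that fail."""
    invalid = []
    for index, pattern in enumerate(patterns):
        try:
            re.compile(pattern)
        except re.error as exc:
            invalid.append((index, pattern, str(exc)))
    return invalid


def build_pattern(patterns: Sequence[str]) -> "re.Pattern[str]":
    invalid = find_invalid(patterns)
    if invalid:
        details = "; ".join(f"#{i} {p!r}: {msg}" for i, p, msg in invalid)
        raise ValueError(f"invalid regex pattern(s): {details}")
    return re.compile("|".join(patterns))


# Compiled at import time, so a bad pattern fails as soon as a test imports this.
MONEY_RE = build_pattern(MONETARY_PATTERNS)


def extract_money(text: str) -> List[str]:
    # No capturing groups, so findall returns the whole matches.
    return MONEY_RE.findall(text)
```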

I understand that Python is interpreted and dynamically typed, and that's why I wrote those tests (unit AND integration) and containerized the application to avoid the wat. And here I was, despite all the measures, preaching best practices and still facing such a bug for the first time. I assumed that the interpreter would do its job and complain about the buggy regex pattern, and that my tests would fail. Thanks to Punch, we dug further into this behavior here.

A friend of mine, Tejaa, had shared a regex resource: https://regex-vis.com/. It is a visual representation (a state diagram of sorts) of the grammar. I tested my faulty pattern \s*+ and the site reported: Error: nothing to repeat. This is better; the error is similar to what I was seeing in my stack trace. I also tested the fixed pattern, and the site showed a correct representation of what I wanted.

Always confirm your assumptions.

assumption is the mother of all mistakes (fuckups)

Pragmatic Zen of Python

2022....

Here is a wishlist for 2022. I have made similar lists in the past, but privately, and I didn't do well at following up on them. I want to change that. I will keep the list small and try to keep the items specific.

- Read More

I didn't read much in 2021. I have three books with me right now: The Selfish Gene by Richard Dawkins, Snow Crash by Neal Stephenson, and Release It! by Michael T. Nygard. I want to finish them, and possibly add a couple more to this list. Last year, I got good feedback on how to improve and grow as a programmer. Keeping that in mind, I will pick up a technical book, which I have never tried before. Let's see how that goes.

- Monitoring air quality data of Bikaner

When I was in Delhi back in 2014, I was really miffed by the deteriorating air quality there. There was a number attached to it, the AQI, registered by sensors at different locations. I felt it didn't reflect the real air quality for commuters like me. I would commute from the office on foot daily, and I would see lots of other folks stuck in traffic breathing in the same pollution. I tried to do something back then, but it didn't get much momentum and I stopped.

Recently, with help from Shiv, I am mentally getting ready to pick it up again. In Bikaner we don't have an official, public AQI number (1, 2, 3). I would like that to change. I want to start small, with a couple of boxes that could start collecting data, then add more features and grow the fleet to cover more areas of the city.

- Write More:

I was really bad at writing in 2021. Fix it. I want to write 4 to 5 good, well-thought-out, well-written posts. I also have a lot of drafts I want to finish. I think being more consistent with writing club on Wednesdays would help.

- Personal Archiving:

Again, a long-simmering project. I want to put together scaffolding that can hold this. I recently read this post, where the author talks about the different services he runs on his server. I am getting a bit familiar with containers, docker-compose and Ansible, and this post has given me some new inspiration for taking small steps. I think the targets for this project are still vague and not specific. I want some room here to experiment, get comfortable, and try existing tools.

Review of AI-ML Workshop

In this post I am reflecting on an Artificial Intelligence and Machine Learning workshop we conducted at NMD: what worked, what didn't, and how to prepare better. We (Nandeep and I) have been visiting NID for the past few years. We are called when students from NMD are doing their Diploma projects and need technical guidance with their project ideas. We noticed that, because of time constraints, students often didn't understand the core concepts of the tools (algorithms, libraries, software) they would use. Many students didn't have a programming background, but their projects needed basic, if not advanced, skills to get a simple demo working. As we wrapped up, we would always reflect on the students' work and how they fared, and wish we had more time to dig deeper. Eventually that happened: we got a chance to conduct a two-week workshop on Artificial Intelligence and Machine Learning in 2021.

What did we plan:

We knew that students would come from a broad spectrum of backgrounds: media (journalism, digital, print), industrial design, architecture, fashion and engineering. All of them had their own systems (laptops), with Windows (7 or 10) or macOS. We were initially told that it would be a five-day workshop. We managed to spread it across 2 weeks, with half a day of workshop every day. We planned the following rough structure for the workshop:

- Brief introduction to the subject and tools we would like to use.

- Basic concepts of programming, Jupyter Notebooks, their features and limitations.

- Handling Data: reading, changing, visualizing, standard operations.

- Introduction to concepts of Machine learning. Algorithms, data-sets, models, training, identifying features.

- Working with different examples and applications, face recognition, speech to text, etc.

How did the workshop go, observations:

After I reached the campus, I got more information on the workshop logistics. We were to run the workshop for two weeks, for full days. We scrambled to make adjustments. We were happy to have more time in hand, but with time came more expectations, and we were underprepared. We decided to add assignments, readings (papers, articles) and possibly a small project by every student to the workshop schedule.

On the first day, after the introduction, Nandeep started with Blockly and concepts of programming in Python. In the second half of the day we did a session on the students' expectations from the workshop. We ended the day with a small introduction to data visualization<link to gurman's post on Indian census and observation on spikes on 5 and 10 year age groups>. For the assignment, we asked everyone to document a good information visualization they liked and how it helped improve their understanding of the problem.

On the second day we covered the basics of Python programming. I was hosting JupyterHub for the class on my system; the session was hands-on, and all the students were asked to follow along, experiment, and ask questions. It was a slow start: it is hard to introduce abstract programming concepts and relate them to applications in the AI/ML domain. In the second half we did a reading of a chapter from Society of Mind<add-link-here>, followed by a group discussion. We didn't follow up on the first day's assignment, which we should have done.

On the third day we tried to pick up the pace to get students closer to applications of AI/ML. We started with the concepts of lists, arrays and array slicing, leading up to how an image is represented as an array. By lunchtime we were able to walk everyone through cropping a face out of an image using array slicing. Every photo editing app has this as a basic feature, but we felt it was a nice journey for the students in making sense of how it is done behind the scenes. In the afternoon session we continued with more image filters and the algorithms behind them. PROBLEM: We had a hard time explaining why imshow by default would show grayscale images in color. We finished the day by assigning each student a random image processing algorithm from scikit-learn; the task was to try it and understand how it worked. By this time we had started setting things up on the students' computers so that they could experiment on their own. Nandeep had a busy afternoon setting up the development environment on everyone's laptop.
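The cropping exercise boils down to slicing rows and then columns. Below is a minimal pure-Python sketch; the function name and the toy image are made up, and with the arrays described above the same crop is a single NumPy slice, noted in the comment. On the imshow puzzle: for a 2-D (single-channel) array, Matplotlib's imshow maps values through its default colormap (viridis since Matplotlib 2.0) instead of drawing gray, so passing cmap="gray" is what displays a grayscale image as gray.

```python
from typing import List

Image = List[List[int]]  # rows of pixel values, i.e. a grayscale image


def crop(image: Image, top: int, left: int, height: int, width: int) -> Image:
    """Cut out a rectangle: slice the rows first, then the columns in each row."""
    # With NumPy this is one expression: image[top:top + height, left:left + width]
    return [row[left:left + width] for row in image[top:top + height]]


# A tiny 4x5 "image" where each pixel value encodes its (row, column) position.
image = [[10 * r + c for c in range(5)] for r in range(4)]
face = crop(image, top=1, left=2, height=2, width=2)
print(face)  # [[12, 13], [22, 23]]
```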

On the fourth day, for the first half, students were asked to talk about the algorithm they had been assigned, demo it, and explain it to the other students. The idea was to get everyone comfortable with reading code and understanding what is needed for more complex tasks like face detection, recognition, object detection etc. In the afternoon session we picked up "Audio". Nandeep introduced them to LibROSA. He walked them through playing a beat<monotone?> on their systems, and how they could load any audio file, mix files, create effects etc. At this point some students were still finishing the third day's assignment while others were experimenting with the audio library. Things got fragmented. Meanwhile, in parallel, we kept answering questions from students and resolving dependencies for their development setups. For the assignment, we gave every student a list of musical instruments and asked them to analyse them, identify their unique features, and figure out how to compare these audio clips with each other.

On the fifth day we picked up text and making computers understand it. We introduced concepts like features and classification, using the NLTK library. We showed them how to create a simple Naive Bayes text classifier: we created a simple dataset, labelled it, built a data pipeline to process and clean the data, extracted features and "trained" the classifier. We were covering the fundamentals of machine learning. For the weekend we gave them an assignment on text summarization, with pointers to existing libraries and how they work. There are different algorithms, and the task was to experiment with them and find their limitations. Could they think of something to improve them? Could they try to implement their ideas?
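The classifier from day five can be written from scratch in a few dozen lines, which helps show what "training" means: counting words per label. This is a sketch of multinomial Naive Bayes with add-one smoothing, not NLTK's implementation (the workshop used NLTK's classifier); the tokenizer, class name and toy dataset are assumptions.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List, Sequence


def tokenize(text: str) -> List[str]:
    """Lowercase and split into word tokens (the "clean up" step)."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes over word counts, with add-one smoothing."""

    def fit(self, docs: Sequence[str], labels: Sequence[str]) -> "NaiveBayes":
        self.class_counts: Counter = Counter(labels)
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total_words = {label: sum(c.values())
                            for label, c in self.word_counts.items()}
        return self

    def predict(self, doc: str) -> str:
        n_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = "", -math.inf
        for label, count in self.class_counts.items():
            # Work in log space so many small probabilities don't underflow.
            score = math.log(count / n_docs)
            for word in tokenize(doc):
                # Add-one smoothing: unseen words get a small, non-zero probability.
                seen = self.word_counts[label][word]
                score += math.log((seen + 1) / (self.total_words[label] + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


docs = ["the movie was great and fun", "what a wonderful film",
        "terrible plot and boring acting", "the film was awful"]
labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayes().fit(docs, labels)
print(model.predict("a fun and wonderful movie"))  # pos
```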

WEEK 1 ENDED HERE

We were not keen on mandatory student attendance and participation, but this open structure didn't give us much control. Students would be discussing things, sharing their ideas and helping each other with debugging. We wanted that to happen, but we were not able to balance student collaboration and peer-to-peer learning with introducing new and more complicated concepts/examples.

Over the weekend I chose a library that could help us introduce the basic concepts of computer vision: face detection and face recognition. BUT I didn't factor in how to set it up on Windows. The library depended on DLib. In the morning session we introduced the concept of Haar cascades (I wanted to include a reading session on the paper). We showed them a demo of how it worked. In the afternoon, students were given time to try things themselves and ask questions. Nandeep had a particularly hard time setting up the library on the students' systems. A couple of students followed up on the weekend project: they had fixed a bug in a library to make it work with Hindi.

On Tuesday we introduced them to speech recognition and explained some core concepts. We set up a demo around Mozilla DeepSpeech. The web microphone demo doesn't work that well in an open conversation setting: there was a lot of cross-talk, and my accent didn't help either. The example we showed was web-based, so we also talked about web applications, cloud computing and the client-server model. The afternoon was again an open conversation on the topic, and students were encouraged to try things themselves.

On Wednesday we covered the different AI/ML components that power modern smart home devices like Alexa, Google Home and Siri. We broke down what it takes for Alexa to tell a joke when asked to: which parts run on the device and which run in the cloud. The cycle starts with the device's microphones, which are always listening and run voice activity detection. Once activated, the device records audio and streams it to the cloud to convert the speech to text. Then intent classification is done using NLU, an answer is found, and finally we, the consumers, get the result. We showed them different libraries, programs and third-party solutions that can be used to build something similar on their own.
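The device-plus-cloud cycle can be sketched as a small pipeline. Everything here is hypothetical and heavily simplified: a mean-energy threshold stands in for voice activity detection, a caller-supplied transcribe function stands in for the cloud speech-to-text service, and keyword rules stand in for an NLU intent classifier.

```python
from typing import Callable, Dict, List, Optional, Sequence

Frame = Sequence[float]  # audio samples in [-1.0, 1.0]


def is_speech(frame: Frame, threshold: float = 0.01) -> bool:
    """Very crude VAD: mean signal energy above a fixed threshold."""
    energy = sum(s * s for s in frame) / len(frame)
    return energy > threshold


def classify_intent(text: str) -> str:
    """Keyword rules standing in for an NLU model."""
    words = text.lower()
    if "joke" in words:
        return "tell_joke"
    if "weather" in words:
        return "get_weather"
    return "unknown"


def assistant(frames: List[Frame],
              transcribe: Callable[[List[Frame]], str],
              handlers: Dict[str, Callable[[str], str]]) -> Optional[str]:
    voiced = [f for f in frames if is_speech(f)]  # on-device: listen
    if not voiced:
        return None                               # nothing said, stay idle
    text = transcribe(voiced)                     # cloud: speech to text
    intent = classify_intent(text)                # cloud: NLU
    handler = handlers.get(intent)                # cloud: find the answer
    return handler(text) if handler else "Sorry, I didn't get that."


silence = [0.0] * 160
speech = [0.5, -0.5] * 80
reply = assistant([silence, speech, silence],
                  transcribe=lambda voiced: "Alexa, tell me a joke",
                  handlers={"tell_joke": lambda text: "Why did the robot cross the road?"})
print(reply)
```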

We continued the discussion the next day on how to run these programs on their own. We stepped away from Jupyter and showed how to run Python scripts. Based on the earlier lesson on face recognition, some students were trying to understand how to detect emotions from a face. This was a nice project. We walked the students through searching for existing projects and papers on the topic. We found a well-maintained GitHub project and followed its README; the maintainer already had a trained model, so we were able to get it working quickly. I felt this was a great exercise: we moved quickly and built on top of existing examples. In the afternoon we did a reading of the Language and Machines section of this blog:

Let's not forget that what has allowed us to create the simultaneously beloved and hated artificial intelligence systems during the last decade, have been advancements in computing, programming languages and mathematics, all of those branches of thought derived from conscious manipulation of language. Even if they may present scary and dystopic consequences, intelligent artificial systems will very rapidly make the quality of our lives better, just as the invention of the wheel, iron tools, written language, antibiotics, factories, vehicles, airplanes and computers. As technology evolves, our conceptions of languages and their meanings should evolve with it.

On the last day we reviewed some of the things we had covered and got feedback from the students. We talked about how we had improvised the workshop based on inputs from students and Jignesh. We needed to prepare better. Students wished they had been more regular and had more time to learn. I don't think we will ever have enough time; this will always be hard. Some students still wanted us to cover more things. Someone asked us to follow up on handling data and information visualization, which we had talked about briefly on day one. So we resumed with that and walked them through an exercise: fetching raw data, cleaning it, plotting it and finding the stories hidden in it.

Resolve inconsistent behaviour of a Relay with an ESP32

I have worked with different popular IoT boards: Arduino, ESP32, Edison<links>, Raspberry Pi. Sometimes trying things myself, other times helping others. I can figure out the code side of a project, but I often get stuck debugging the hardware. This has especially blocked me from doing anything beyond basic hello world examples.

A couple of months ago, I picked up an ESP32 again. I was able to source some components from a local shop: an ESP32, a battery, a charging IC, a relay, LEDs, different resistors and jumper cables. I started off with the simple LED blinking example and got it working fairly quickly. Using examples, I was able to connect the ESP32 to Wi-Fi and control the LED via a static web page<link-to-example>. Everything was working: documentation, hardware, examples. This was promising and I was getting excited.

Next, a friend of mine, Shiv, who is good at putting together electrical components, brought an LED light bulb, and we thought, let's control it remotely with the ESP32. We referred to relay switch connections, connected jumper cables and confirmed that the relay would flip the LED bulb when we controlled the light over Wi-Fi. It was not working consistently, but it was working to a certain extent. Shiv quickly bundled everything inside the bulb. He connected the power supply to the charging IC and powered the ESP32. We connected the relay properly. Everything was neat, clean, packed and ready to go. We plugged in the bulb and waited for the ESP32 to connect to Wi-Fi. It was on; I was able to refresh the web page that controlled the light. So far so good. We tried switching on the LED bulb: nothing. We tried a couple of times: nothing. On the web page I could see that the state of the light was toggling. I didn't have access to the serial monitor, so I could not tell whether everything on the ESP32 was working. And I thought to myself, sigh, here we go again.

We disassembled everything and laid all the components flat on the table. I connected the ESP32 to my system with a USB cable. Shiv got a multimeter. We confirmed that the pins we were connecting to were going HIGH and LOW. There was an LED on the relay, and it was flipping correctly. We also heard a click in the relay when we toggled the state. And still the behaviour of the LED light was not consistent: either it wouldn't turn on, or if it turned on, it wouldn't turn off. Rebooting the ESP32 would fix things briefly, and after a couple of iterations it would be completely bricked. In the logs everything was good, consistent, the way it should be. But it was not. I gave up and left.

Shiv, on the other hand, kept trying different things. He noticed that although the pin we connected to would in theory go HIGH and LOW, LOW didn't mean 0 V: he was still getting some measurement even when the pin was LOW. He added resistors between the ESP32 pin and the relay input, but it still didn't bring LOW down to zero. He read a bit more, AND he added an LED between the ESP32 and the relay: Voilà.

The LED was perfect. It behaved as a switch: it takes the 3.3 V and uses it. Anything less, which is what we had when we put the ESP32 pin LOW, the LED would eat up and not let through. Connected on the other end, the relay started blinking and clicking happily. What a nice find. Shiv again packed everything together. When we met the next day, he showed me the bulb and said, "Want to try?". I was skeptical. I opened the web page and clicked "ON", and the bulb turned on. Off, and the bulb was off. I clicked hurriedly to break it. It didn't break. It kept working, consistently, every single time.
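A rough, idealized way to see why the LED helped: treat the LED as a part that conducts only once the voltage across it exceeds its forward voltage V_f (roughly 1.8 to 2 V for a typical red LED; the exact value is an assumption here, as is ignoring the relay input's own characteristics). The voltage left over for the relay input is then approximately:

```latex
V_{\text{relay}} \approx
\begin{cases}
V_{\text{pin}} - V_f, & V_{\text{pin}} > V_f \\
0, & V_{\text{pin}} \le V_f
\end{cases}
\qquad
\text{e.g. } V_f = 1.8\,\text{V}:\quad
\text{HIGH } (3.3\,\text{V}) \to 1.5\,\text{V},\quad
\text{residual LOW } (< 1.8\,\text{V}) \to 0
```

Under this model a LOW that never quite reaches 0 V no longer leaks through to the relay, while HIGH still leaves a voltage at the relay input, which is consistent with what Shiv observed. A real LED's current rises gradually rather than switching exactly at V_f, so treat this as intuition, not a circuit analysis.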

Challenges involved in archiving a webpage

Last year, as I was picking up ideas around building a personal archival system, I put together a small utility that would download and archive a web page. As I kept thinking about the subject, I realized it has some significant shortcomings:

- In the utility I parse the content of the page and find different kinds of URLs (img, css, js) to recursively fetch the static resources, so that in the end I have an archive of the page. But there is more to how a page gets rendered. Browsers parse the HTML and all the resources to finally render the page. The program we write has to be equally capable, or else the archive won't be complete.

- Whenever I have been behind a proxy on college campuses, I have noticed reCAPTCHA pop up, saying something along the lines of suspicious activity being detected from my connection. How would this automated archival system avoid that? I have a feeling that if automated browsing of a page triggers reCAPTCHA, the system will be locked out and won't get any of the page's relevant content. My concern is that I don't know enough about how and when CAPTCHAs trigger, or how to avoid or handle them and have guaranteed access to the content of the page.

- Handling paywalls, limits on the number of articles you can read in a certain time, or banners that obscure the content with a login screen.
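The parse step from the first item can be sketched with Python's standard-library HTML parser. This is a hypothetical sketch, not the utility from this post, and it stops before the recursive fetch: it collects img and script src attributes and stylesheet link href attributes, and resolves them against the page URL. It also illustrates that first shortcoming: anything a script loads at runtime, url() references inside CSS, and srcset candidates never show up.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urljoin


class ResourceCollector(HTMLParser):
    """Collect URLs of static resources referenced directly in the HTML."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.resources: List[str] = []

    def handle_starttag(self, tag: str,
                        attrs: List[Tuple[str, Optional[str]]]) -> None:
        attributes = dict(attrs)
        url = None
        if tag in ("img", "script"):
            url = attributes.get("src")  # inline scripts have no src
        elif tag == "link":
            rel = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel:  # skip icons, preconnect, etc.
                url = attributes.get("href")
        if url:
            # Resolve relative paths like "img/a.png" against the page URL.
            self.resources.append(urljoin(self.base_url, url))


def collect_resources(html: str, base_url: str) -> List[str]:
    parser = ResourceCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.resources


page = ('<html><head><link rel="stylesheet" href="/s.css"><link rel="icon" href="f.ico">'
        '<script src="app.js"></script></head><body><img src="img/a.png"/></body></html>')
print(collect_resources(page, "https://example.com/page/"))
```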

I am really slow at executing and experimenting around these questions. And I feel that unless I start working on it, I will keep collecting these questions and adding more inertia to taking a crack at the problem.